AI Act compliance: deploy your AI systems in compliance with AI Act requirements

With the AI Act, the European Union is imposing new obligations to guarantee the transparency, security and compliance of AI systems. Netsystem can help you navigate these complex regulations and ensure effective, long-term compliance.

The Artificial Intelligence Act (AI Act) is a European Union regulation governing the development and use of artificial intelligence within the European market. Its main objective is to ensure that AI systems respect the fundamental values of the EU, in particular fundamental rights, safety and transparency.

The regulation classifies AI systems according to their level of risk, ranging from minimal to unacceptable. High-risk systems are subject to strict requirements, such as conformity assessments, transparency obligations and human oversight mechanisms. This approach aims to minimise potential risks while encouraging responsible innovation.

The AI Act provides for measures to strengthen public confidence in AI technologies, by imposing quality standards and ensuring ongoing monitoring of the systems deployed. The aim is to position Europe as a world leader in ethical and reliable AI, while protecting citizens from potentially harmful uses of these technologies.

The AI Act's obligations become applicable in stages, but certain obligations can already be anticipated.

From mapping to the development of an action plan, we support you in the deployment of your new AI projects (compliance by design) and in bringing your existing AI projects into compliance (compliance by default).

Launch and awareness meeting

Mapping of AI systems

AI risk analysis and GDPR audit

Drawing up an AI compliance action plan

Our outsourced DPO expertise enables us to ensure 360° compliance of your AI systems with the implementation of AI & Privacy governance.

Whenever you consider deploying an AI system, it is essential to analyse the project's level of risk and decide whether to abandon it or put it into production.

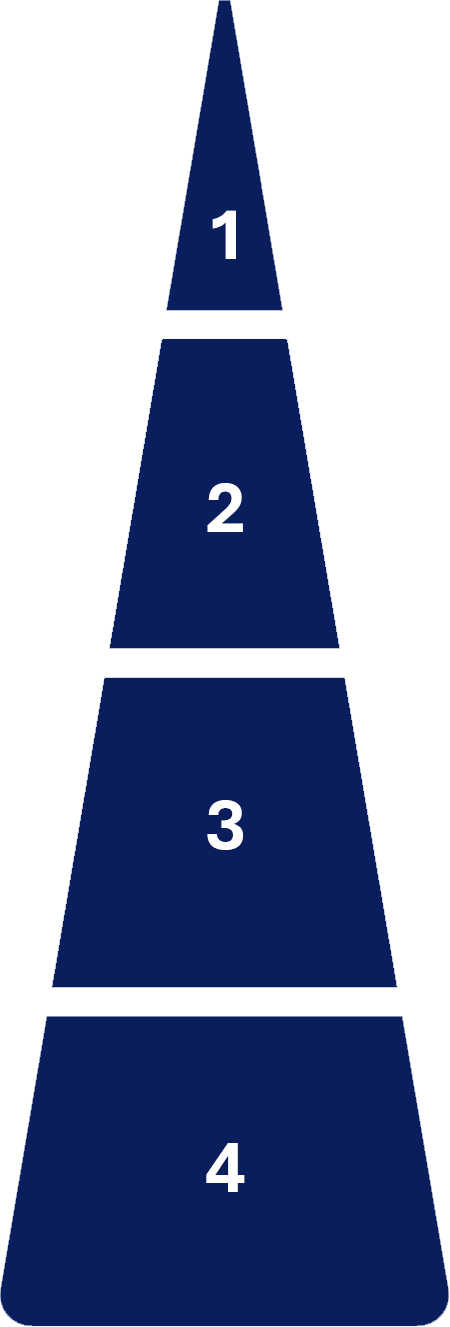

The AI Act defines four levels of risk for AI systems:

1. UNACCEPTABLE RISK

AI systems that run counter to EU values and infringe fundamental rights.

=> Project abandoned

2. HIGH RISK

AI systems deployed in high-risk products, services or sectors defined by the regulation.

=> Compliance in 10 steps

3. LIMITED RISK

AI systems that interact with individuals and do not present a high risk.

=> Transparency and impact assessment

4. MINIMAL RISK

AI systems that do not fall into the other categories.

=> Drafting of a code of conduct
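As an illustration only, the triage described above can be sketched as a small decision function. This is a minimal sketch, not a legal assessment tool: the three yes/no questions, the function name `classify` and the `NEXT_STEP` table are hypothetical simplifications of the four level descriptions, and levels 3 and 4 are named `LIMITED` and `MINIMAL` here.

```python
from enum import Enum


class RiskLevel(Enum):
    UNACCEPTABLE = 1
    HIGH = 2
    LIMITED = 3
    MINIMAL = 4


# Follow-up action for each level, copied from the list above.
NEXT_STEP = {
    RiskLevel.UNACCEPTABLE: "Project abandoned",
    RiskLevel.HIGH: "Compliance in 10 steps",
    RiskLevel.LIMITED: "Transparency and impact assessment",
    RiskLevel.MINIMAL: "Drafting of a code of conduct",
}


def classify(infringes_fundamental_rights: bool,
             in_high_risk_area: bool,
             interacts_with_individuals: bool) -> RiskLevel:
    """Return the first matching level, checking the most severe first.

    The three flags are assumed answers from a prior risk analysis;
    a real assessment relies on the regulation's own criteria.
    """
    if infringes_fundamental_rights:
        return RiskLevel.UNACCEPTABLE
    if in_high_risk_area:
        return RiskLevel.HIGH
    if interacts_with_individuals:
        return RiskLevel.LIMITED
    return RiskLevel.MINIMAL


# Example: a customer-facing chatbot outside any high-risk sector.
level = classify(False, False, True)
print(level.name, "=>", NEXT_STEP[level])
```

Checking the most severe level first means a system that matches several descriptions is always assigned the strictest one.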

We know that every organisation is unique. Our methodology is tailored to your specific needs, whether it’s an initial audit, an update of your systems’ compliance with the AI Act or a compliance analysis of an AI system under consideration.

To find out more about our support services, please contact us. We’re here to help you secure your digital journey and turn the challenges of artificial intelligence into real opportunities for growth.
